Measuring Accuracy Using Cross-Validation

• A good way to evaluate a model is to use cross-validation.

• Let’s use the cross_val_score() function to evaluate our SGDClassifier model, using K-fold cross-validation with three folds.

• Remember that K-fold cross-validation means splitting the training set into K folds (in this case, three), then making predictions and evaluating them on each fold using a model trained on the remaining folds.

from sklearn.model_selection import cross_val_score

cross_val_score(sgd_clf, X_train, y_train_5, cv=3, scoring="accuracy")

array([0.96355, 0.93795, 0.95615])

• Above 93% accuracy (ratio of correct predictions) on all cross-validation folds? This looks amazing, doesn’t it?

• Before you get too excited, let’s look at a very dumb classifier that assigns every single image to the “not-5” class:

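import numpy as np
from sklearn.base import BaseEstimator

class Never5Classifier(BaseEstimator):
    def fit(self, X, y=None):
        # Nothing to learn: the prediction never depends on the data
        return self

    def predict(self, X):
        # Always predict False ("not-5") for every instance
        return np.zeros((len(X), 1), dtype=bool)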

• Can you guess this model’s accuracy? Let’s find out:

never_5_clf = Never5Classifier()

cross_val_score(never_5_clf, X_train, y_train_5, cv=3, scoring="accuracy")

array([0.91125, 0.90855, 0.90915])

·

It has over 90% accuracy!

·

This is simply because

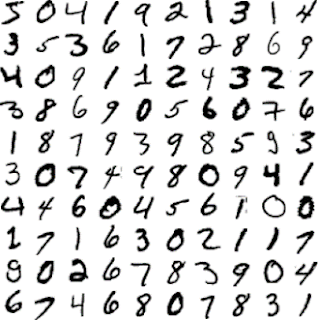

ü only about 10% of the images are 5s,

ü so if you always guess that

an image is not a 5,

•

you will be right about 90% of the time.

•

This demonstrates why accuracy is

•

generally not the preferred performance measure for classifiers,

ü especially

when you are dealing

with skewed datasets

ü i.e.,

when some

classes are much more frequent than others.
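The arithmetic behind this can be checked with a small sketch. The labels below are hypothetical (1,000 samples with exactly 10% positives), not the MNIST data used above; the point is only that a majority-class baseline scores 90% accuracy while detecting no positives at all.

```python
import numpy as np

# Hypothetical labels: 1,000 samples, exactly 10% positive ("is a 5")
y_true = np.zeros(1000, dtype=bool)
y_true[:100] = True

# A baseline that always predicts the majority class ("not a 5")
y_pred = np.zeros_like(y_true)

accuracy = np.mean(y_pred == y_true)       # fraction of correct predictions
positives_found = np.sum(y_pred & y_true)  # how many actual 5s were detected

print(accuracy)         # 0.9
print(positives_found)  # 0
```

High accuracy here says nothing about the classifier’s ability to find the rare class, which is why accuracy alone is misleading on skewed data.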

• Implementing Cross-Validation: Occasionally you will need more control over the cross-validation process than what Scikit-Learn provides off the shelf. In these cases, you can implement cross-validation yourself.
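One possible way to do this is sketched below, assuming a stratified three-fold split. The helper name manual_cross_val_accuracy and the synthetic X_demo / y_demo arrays are illustrative stand-ins (they are not part of these slides or of Scikit-Learn); StratifiedKFold, clone, SGDClassifier and cross_val_score are the real Scikit-Learn objects.

```python
import numpy as np
from sklearn.base import clone
from sklearn.linear_model import SGDClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score


def manual_cross_val_accuracy(model, X, y, n_splits=3):
    """Hypothetical helper: return the accuracy measured on each fold."""
    skfolds = StratifiedKFold(n_splits=n_splits)  # keeps class ratios in each fold
    scores = []
    for train_index, test_index in skfolds.split(X, y):
        clone_clf = clone(model)  # fresh, unfitted copy for every fold
        clone_clf.fit(X[train_index], y[train_index])
        y_pred = clone_clf.predict(X[test_index])
        n_correct = np.sum(y_pred == y[test_index])
        scores.append(n_correct / len(y_pred))
    return np.array(scores)


# Synthetic stand-in for X_train / y_train_5 (roughly 10% positives)
rng = np.random.default_rng(42)
X_demo = rng.normal(size=(300, 5))
y_demo = X_demo[:, 0] > 1.28

sgd_demo = SGDClassifier(random_state=42)
scores = manual_cross_val_accuracy(sgd_demo, X_demo, y_demo)

# For comparison: the off-the-shelf function on the same data and model
reference = cross_val_score(sgd_demo, X_demo, y_demo, cv=3, scoring="accuracy")
print(scores)
print(reference)
```

Because cross_val_score also uses an unshuffled stratified split for classifiers when cv is an integer, the two arrays should agree. The manual loop is where extra control can be added, for example custom per-fold preprocessing or logging.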