Decision Tree Classifier on Iris Dataset

Steps:

1. Importing the library files

2. Reading the Iris Dataset
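A minimal sketch of steps 1 and 2, assuming the copy of Iris bundled with scikit-learn is used (the original post may have read a CSV file instead, and the variable names here are only illustrative):

```python
# Steps 1-2 (sketch): import the library and load the Iris dataset.
# Assumption: the data comes from scikit-learn's bundled copy, not a CSV file.
from sklearn.datasets import load_iris

iris = load_iris()
X = iris.data    # feature matrix, one row per flower
y = iris.target  # integer class labels

print(X.shape, y.shape)
print(iris.feature_names)  # column order of X
print(iris.target_names)   # species names for the integer labels
```

The column order matters later: names such as feature_2 and feature_3 in the printed trees refer to the third and fourth columns of X, which in this bundled copy are petal length and petal width.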

3. Preprocessing
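The post does not say which preprocessing was applied. As one plausible sketch, the check below looks for missing values and class imbalance; it is an assumption, not the author's actual step:

```python
# Step 3 (sketch): basic sanity checks before modelling.
# Assumption: "preprocessing" here means checking for missing values and
# class balance; the original post does not specify what was done.
import numpy as np
from sklearn.datasets import load_iris

X, y = load_iris(return_X_y=True)

# Count missing (NaN) entries in the feature matrix.
n_missing = int(np.isnan(X).sum())

# Count how many samples belong to each class.
classes, counts = np.unique(y, return_counts=True)

print("missing values:", n_missing)
print("samples per class:", dict(zip(classes.tolist(), counts.tolist())))
```

Feature scaling is usually unnecessary for a decision tree, because each split only compares a single feature against a threshold.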

4. Split the dataset into training and testing
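A sketch of the split, assuming a 70/30 ratio, a fixed seed and stratified sampling; none of these values are stated in the post:

```python
# Step 4 (sketch): hold out part of the data for testing.
# Assumptions: 30% test size, random_state=42 and stratification are
# illustrative choices, not values taken from the original post.
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(
    X, y,
    test_size=0.3,    # fraction of rows reserved for testing
    random_state=42,  # fixed seed so the split is reproducible
    stratify=y,       # keep class proportions equal in both parts
)

print(X_train.shape, X_test.shape)
```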

5. Build the model (Decision Tree Model)

class sklearn.tree.DecisionTreeClassifier(*, criterion='gini', splitter='best', max_depth=None, min_samples_split=2, min_samples_leaf=1, min_weight_fraction_leaf=0.0, max_features=None, random_state=None, max_leaf_nodes=None, min_impurity_decrease=0.0, class_weight=None, ccp_alpha=0.0)

Parameters:

criterion: {“gini”, “entropy”, “log_loss”}, default=“gini”

The function to measure the quality of a split. Supported criteria are “gini” for the Gini impurity, and “log_loss” and “entropy”, both for the Shannon information gain.
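To make the two impurity measures concrete, here is a small pure-Python sketch. The helper names gini and entropy are hypothetical and are not part of scikit-learn:

```python
# Impurity of a set of class labels (sketch; helper names are hypothetical).
from collections import Counter
from math import log2


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels):
    """Shannon entropy in bits: minus the sum of p * log2(p) over classes."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


balanced = [0] * 50 + [1] * 50 + [2] * 50  # three equally frequent classes
pure = [0] * 50                            # a single class

print(gini(balanced), entropy(balanced))
print(gini(pure), entropy(pure))
```

Both measures are zero for a pure node and largest when the classes are evenly mixed, so a good split is one whose child nodes have lower impurity than the parent.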

splitter: {“best”, “random”}, default=“best”

The strategy used to choose the split at each node. Supported strategies are “best” to choose the best split and “random” to choose the best random split.

max_depth: int, default=None

The maximum depth of the tree. If None, then nodes are expanded until all leaves are pure or until all leaves contain fewer than min_samples_split samples.

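min_samples_split: int or float, default=2

The minimum number of samples required to split an internal node:

If int, then consider min_samples_split as the minimum number.

If float, then min_samples_split is a fraction and ceil(min_samples_split * n_samples) is the minimum number of samples for each split.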
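When a float is passed, scikit-learn treats it as a fraction of the training samples and rounds up. The hypothetical helper below mirrors that rule, including the lower bound of 2, purely as an illustration:

```python
# Sketch of how a min_samples_split value maps to a sample count.
# The helper name is hypothetical; it is not a scikit-learn function.
from math import ceil


def effective_min_samples_split(min_samples_split, n_samples):
    """Return the absolute sample count implied by min_samples_split."""
    if isinstance(min_samples_split, int):
        return min_samples_split  # already an absolute count
    # A float is a fraction of the training samples, rounded up,
    # and a node always needs at least 2 samples to be split.
    return max(2, ceil(min_samples_split * n_samples))


# Illustrative numbers: 105 training samples.
print(effective_min_samples_split(2, 105))
print(effective_min_samples_split(0.1, 105))
```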

min_samples_leaf: int or float, default=1

The minimum number of samples required to be at a leaf node. A split point at any depth will only be considered if it leaves at least min_samples_leaf training samples in each of the left and right branches. This may have the effect of smoothing the model, especially in regression.

min_weight_fraction_leaf: float, default=0.0

The minimum weighted fraction of the sum total of weights (of all the input samples) required to be at a leaf node. Samples have equal weight when sample_weight is not provided.

max_features: int, float or {“auto”, “sqrt”, “log2”}, default=None

The number of features to consider when looking for the best split:

If int, then consider max_features features at each split.

If float, then max_features is a fraction and max(1, int(max_features * n_features_in_)) features are considered at each split.

If “auto”, then max_features=sqrt(n_features).

If “sqrt”, then max_features=sqrt(n_features).

If “log2”, then max_features=log2(n_features).

If None, then max_features=n_features.

random_state: int, RandomState instance or None, default=None

max_leaf_nodes: int, default=None

Grow a tree with max_leaf_nodes in best-first fashion. Best nodes are defined as relative reduction in impurity. If None, the number of leaf nodes is unlimited.

min_impurity_decrease: float, default=0.0

A node will be split if this split induces a decrease of the impurity greater than or equal to this value.

ccp_alpha: non-negative float, default=0.0
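Returning to step 5, a minimal sketch of building the model. It assumes the default parameters listed in the signature, plus an illustrative 70/30 split and fixed seeds that are not taken from the post:

```python
# Step 5 (sketch): fit a decision tree on the training split.
# Assumptions: the split settings and random_state values are illustrative.
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

# criterion="gini" is the default; random_state makes tie-breaking repeatable.
clf = DecisionTreeClassifier(criterion="gini", random_state=42)
clf.fit(X_train, y_train)

print("depth:", clf.get_depth())
print("leaves:", clf.get_n_leaves())
```

With max_depth=None the tree keeps splitting until the leaves are pure, so limiting max_depth or raising min_samples_leaf is a common way to reduce overfitting.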

6. Evaluate the performance of the Model
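One way to carry out the evaluation is sketched below with accuracy, a confusion matrix and a per-class report. The choice of metrics and the split settings are assumptions, since the post does not list them:

```python
# Step 6 (sketch): evaluate the fitted tree on the held-out test split.
# Assumptions: metrics and split settings are illustrative choices.
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=42, stratify=iris.target
)

clf = DecisionTreeClassifier(random_state=42).fit(X_train, y_train)
y_pred = clf.predict(X_test)

acc = accuracy_score(y_test, y_pred)   # fraction of correct predictions
cm = confusion_matrix(y_test, y_pred)  # rows: true class, columns: predicted

print("accuracy:", acc)
print(cm)
print(classification_report(y_test, y_pred, target_names=iris.target_names))
```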

7. Visualize the model

7.1 Visualize the Decision Tree on Training Data
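Text listings in this format can be produced with sklearn.tree.export_text. The sketch below assumes an illustrative split and seed, so its thresholds may differ from the listing in this post:

```python
# Step 7.1 (sketch): print the fitted tree as indented text rules.
# Assumptions: split settings and random_state are illustrative, so the
# exact thresholds depend on which rows land in the training split.
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, export_text

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

clf = DecisionTreeClassifier(random_state=42).fit(X_train, y_train)

# Without feature_names, columns are labelled feature_0 ... feature_3.
rules = export_text(clf)
print(rules)
```

Passing feature_names=load_iris().feature_names to export_text replaces the generic feature_N labels with the measurement names, and sklearn.tree.plot_tree draws the same tree as a figure.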

|--- feature_2 <= 2.45

| |--- class: 0

|--- feature_2 > 2.45

| |--- feature_3 <= 1.65

| | |--- feature_2 <= 4.95

| | | |--- class: 1

| | |--- feature_2 > 4.95

| | | |--- feature_3 <= 1.55

| | | | |--- class: 2

| | | |--- feature_3 > 1.55

| | | | |--- class: 1

| |--- feature_3 > 1.65

| | |--- feature_2 <= 4.85

| | | |--- feature_1 <= 3.10

| | | | |--- class: 2

| | | |--- feature_1 > 3.10

| | | | |--- class: 1

| | |--- feature_2 > 4.85

| | | |--- class: 2

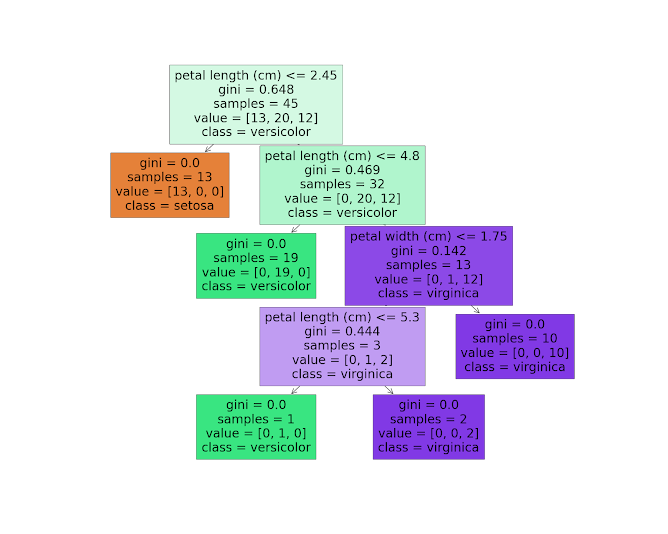

7.2 Visualize the Decision Tree on Testing Data

|--- feature_2 <= 2.45

| |--- class: 0

|--- feature_2 > 2.45

| |--- feature_2 <= 4.80

| | |--- class: 1

| |--- feature_2 > 4.80

| | |--- feature_3 <= 1.75

| | | |--- feature_2 <= 5.30

| | | | |--- class: 1

| | | |--- feature_2 > 5.30

| | | | |--- class: 2

| | |--- feature_3 > 1.75

| | | |--- class: 2

7.3 Visualize the Decision Tree on Overall Data

|--- feature_2 <= 2.45

| |--- class: 0

|--- feature_2 > 2.45

| |--- feature_3 <= 1.75

| | |--- feature_2 <= 4.95

| | | |--- feature_3 <= 1.65

| | | | |--- class: 1

| | | |--- feature_3 > 1.65

| | | | |--- class: 2

| | |--- feature_2 > 4.95

| | | |--- feature_3 <= 1.55

| | | | |--- class: 2

| | | |--- feature_3 > 1.55

| | | | |--- feature_0 <= 6.95

| | | | | |--- class: 1

| | | | |--- feature_0 > 6.95

| | | | | |--- class: 2

| |--- feature_3 > 1.75

| | |--- feature_2 <= 4.85

| | | |--- feature_1 <= 3.10

| | | | |--- class: 2

| | | |--- feature_1 > 3.10

| | | | |--- class: 1

| | |--- feature_2 > 4.85

| | | |--- class: 2
